Collection Spotlight: Research AI from Statista

by Stephanie JH McReynolds, Librarian for Business, Management, and Entrepreneurship

Statista is a well-known resource for quick stats and reports. The sources Statista lists can also lead you to organizations and government resources offering additional information and statistics. All this can be discovered by browsing Statista content sections, such as “Industry & Market Reports,” or by using simple keyword searches and then filtering results.

Recently, Statista launched a new tool within the database that goes beyond browsing and keyword searching. Research AI is a generative artificial intelligence tool grounded in Statista data. Prompt Research AI with a question and you will receive a response based on up to 10 of the sources Research AI deems most relevant. These sources are drawn from all available Statista statistics, infographics, reports, findings from Statista’s Market Insights, and more (Statista, 2024c).

“Research AI supports a wide variety of languages,” and the tool will respond in the language used for the prompt (Statista, 2024c). However, the quality of responses “may vary by language due to differences in the amount of training data available” (Statista, 2024c). If the quality of the response is insufficient, Statista recommends using “English due to its extensive training dataset” (Statista, 2024c). Because response quality may vary with the language used, note that all prompts mentioned in this post were entered in English.

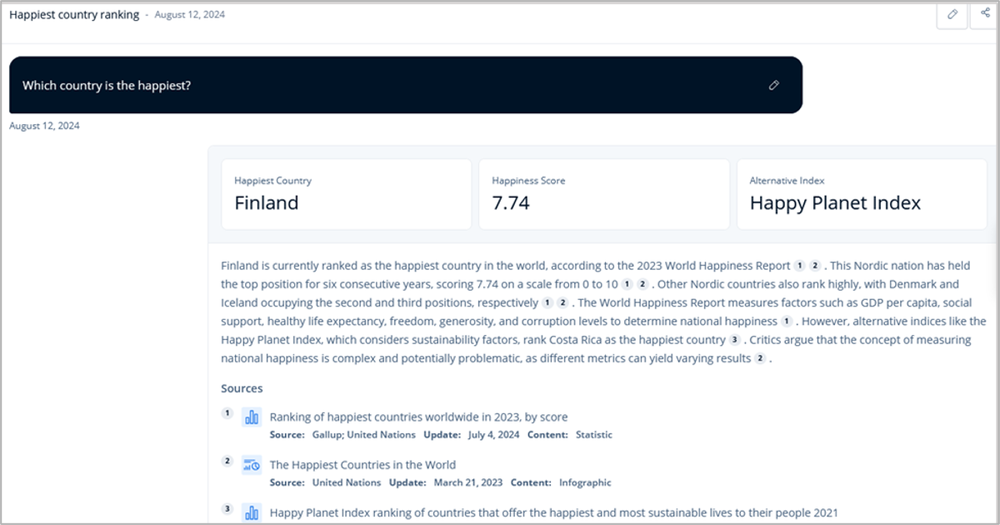

At the top of its response, Research AI features two or three relevant data points. For instance, prompt Research AI with “Which country is the happiest?” and you may see “Happiest Country: Finland,” “Happiness Score: 7.74,” and “Alternative Index: Happy Planet Index.” These data points precede a paragraph-long response beginning with, “Finland is currently ranked the happiest country in the world, according to the 2023 World Happiness Report” and ending with the caution, “Critics argue that the concept of measuring national happiness is complex and potentially problematic, as different metrics can yield varying results” (Statista, 2024a).

Each response includes in-text citations and a corresponding list of sources. The date Statista updated a source is included in the list of citations, but this date should not be equated with the period when the underlying data was gathered. Click through to the source to learn when the data was collected, and take some time to read and consider the source before deciding whether it is relevant and credible enough to include in your research. Click on a cited source in the list to simultaneously view the source and the specific Research AI-generated sentence citing that source.

When available, a list of additional Statista sources to explore (beyond those cited in the response) will appear underneath the response in a section labeled “Curated Content Recommendations.” Also below the response is a short list of “Extended Query Recommendations,” which are based on the question asked and response generated. Recommendations for the prompt “Which shampoo has the highest market share in the United States?” might include, “Impact of marketing strategies on market share: What is the impact of different marketing strategies on the market share of shampoo brands in the United States?” (Statista, 2024a). Clicking on a recommendation will generate a Research AI response based on the recommended prompt.

Like all AI tools, Research AI is fallible. Its responses are limited by the data available in the Statista database and the technology used to generate a response. As a reminder of these limitations, this note accompanies each response: “Disclaimer: Our AI can make mistakes. Please check important information” (Statista, 2024a).

Sometimes, you may find that a response omits key information. For example, when prompted to compare paid leave in the United States to other countries, the response highlighted vacation time and parental leave given to fathers, but maternity leave and parental leave given to mothers were not mentioned in the response or included in the listed sources, even though Statista includes this data (Statista, 2024a). Editing the prompt to “paid leave, including maternity leave” shifted the response to one that focused exclusively on maternity and parental leave; vacation time was not included as a type of leave (Statista, 2024a). A further edit that specified the types of leave, “paid leave, including vacation time, parental leave, and maternity leave,” yielded a response that included sources covering vacation time, family leave, and maternity leave (Statista, 2024a).

Likewise, a question about barriers to access to health care based on gender yielded a response that focused only on men and women. A different prompt asking about barriers to health care faced by non-binary individuals yielded a relevant response, showing that Statista includes this data even though the data did not appear in the response given about barriers to health care based on gender (Statista, 2024a).

These examples of editing a prompt (or creating a new prompt) for greater accuracy and specificity demonstrate a type of prompt engineering that is often necessary to yield relevant results in generative AI tools. For more information, see Prompt Engineering on the Libraries’ Artificial Intelligence guide.

You may notice that the exact same prompt in Research AI can yield different responses and different sources. This is to be expected and (along with the above examples of how relevant Statista data may be omitted from a response) serves as a good reminder that Research AI should not be your only research resource. Your research will likely benefit from browsing and running keyword searches within Statista to find additional relevant data, and you will most likely want to explore other Libraries databases and additional research resources as well.

Rather than providing a partial response, Research AI will sometimes respond with, “I apologize, but I do not have enough information from the provided search results to generate a comprehensive and accurate answer,” followed by mentions of related data available via Statista or a note about what type of data would be needed to provide an answer (Statista, 2024a). For instance, a prompt about comparing the quality of women’s health care among three countries resulted in this explanation: “Without specific information about health outcomes, access to care, maternal mortality rates, and other relevant metrics for each country, I cannot make an informed assessment or comparison of women’s health care quality in these nations” (Statista, 2024a).

You can use such responses as encouragement to think further about the type of data you would need to answer your question. Doing so could lead you to brainstorm other data and article sources to explore (beyond Statista) and to consider which aspect of your question you would like to focus on and refine as you conduct your research. For instance, if you are most interested in comparing access to health care across the three countries, you may want to consider additional resources on the Public Health research guide as a starting point.

If you are a student, before using any generative AI tool (including Research AI) as part of your academic research process, you should make sure you understand your professors’ expectations around the use of these tools. For instance, it is generally not acceptable to use and cite a generative AI tool as a direct source for your academic work (Stawarz, 2024). Some professors may be comfortable with students using certain AI tools as starting points for research that lead to sources students can explore further and cite directly, while other professors may have different expectations. For more information about academic integrity and AI, including an AI tutorial, see the AI for Students page on the Libraries’ Artificial Intelligence guide.

Faculty and graduate students should also be aware that publishers may have policies governing the use of AI throughout the research, writing, and publishing process (Stawarz, 2024). For more information about publisher policies, as well as guidance on the use of AI in research and in the classroom, see the Copyright & Intellectual Property and AI for Instructors pages of the Artificial Intelligence guide.

For those interested in the technology behind Research AI, as of this writing, Research AI V1 (the current version of Research AI) uses semantic search, Cohere technology, and the large language model Claude 3 Sonnet to process prompts and generate responses based on data from the Statista database (Statista, 2024c). For more information about the technical details and functionality of Research AI, go to the Research AI overview page within Statista. For a Statista demo of Research AI, see this video. To learn more about generative AI, visit the Libraries’ Artificial Intelligence research guide.

To provide feedback or suggest a title to add to the collection, please complete the Resource Feedback Form.

References

Statista. (2024a). Research AI (V1) [Large language model].

Statista. (2024b). Research AI response to prompt “Which country is the happiest?” [Screenshot].

Statista. (2024c). Welcome back to Research AI.

Stawarz, J. (2024, August 8). Artificial intelligence. Syracuse University Libraries Research Guides.